PCN Lab: Difference between revisions


| (35 intermediate revisions by the same user not shown) | |||


:''Warning: This entire article is written in the first-person ([[Mark Kamichoff|Mark Kamichoff]]'s) point of view'' | |||

[[Image:rack.jpg|thumb|PCN Lab Rack]]The [[PCN]] lab is a network extension of the Prolixium Communications Network composed of Juniper vMX, Cumulus VX, MikroTik RouterOS, Cisco NX-OSv, Cisco IOS-XRv, and Cisco IOSv systems, with a few pieces of real equipment (SRX and ScreenOS firewalls). It has been traditionally used by [[Mark Kamichoff]] to test and break network things but now it mostly sits idle. | |||


== Random Lab Setups == | == Random Lab Setups == | ||

The current version is | <div class="mw-collapsible mw-collapsed">Deprecated lab setups are hidden by default. Click '''expand''' to see them.<div class="mw-collapsible-content">The current version is 4.0, although the lab environment is always changing. | ||

=== Lab 1.0 === | === Lab 1.0 === | ||

My previous lab setup involved a basic Dynamips setup, with the goal of learning and toying with MPLS | My previous lab setup involved a basic [[Dynamips]] setup, with the goal of learning and toying with [[MPLS]], [[multicast]], and other weird stuff. The lab consisted of: | ||

* 4x emulated Cisco 7206VXR routers | |||

* 1x Juniper M40 (Olive) router | |||

* 1x Debian GNU/Linux virtual machine | |||

Lab | [[file:lab.png|280px|PCN Lab 1.0]] | ||

All routers ran MPLS with LDP | All routers ran MPLS with [[LDP]], which allows for dynamic creation of [[LSP|LSPs]] and much less configuration than [[RSVP]]-TE. Latency to the end host, sinc, was pretty horrible: roughly 150ms from the [[LAN]]. Bandwidth was limited to around 50KB/sec, since all Cisco routers, along with the VM, were emulated on a single Dell Dimension 2350 w/a Celeron processor. The Juniper box was the only real piece of hardware. Still, it's pretty fun. | ||

=== Lab 2.0 === | === Lab 2.0 === | ||

After I upgraded my main PC to a Core 2 Extreme processor, I realized I had a spare Athlon64 3200+ system that could run tons of Dynamips | After I upgraded my main PC to a Core 2 Extreme processor, I realized I had a spare Athlon64 3200+ system that could run tons of Dynamips simulators without breaking a sweat. Unfortunately the 0.2.7 release of Dynamips consumes a ton more CPU than previous versions, so I'm only able to run five simulators without the system becoming too sluggish to use. I picked up a new box, [[vega]], which handles the load just fine. I built configurations for 9 routers and 5 VMware hosts, plus a Juniper Olive (real machine). Here's a diagram of the setup: | ||

[[file:newlab.png|280px|Lab 2.0 environment]] | |||

Yep, just a bit of a Star Trek theme. | Yep, just a bit of a [[Star Trek]] theme. | ||

The lab runs | The lab runs multiprotocol BGP (IPv6 and IPv4), OSPFv2, OSPFv3, and LDP (MPLS enabled on all transit links). Connectivity to the outside production network is provided via relativity, a Juniper Olive, which is connected to a dedicated [[Fast Ethernet]] on starfire, my core router. relativity and starfire have an EBGP peering session, with IPv4 and IPv6 address families, and do a form of conditional mutual route redistribution (it's not that bad, really..). The lab learns a default route via BGP (0/0 and ::/0), as well as specific prefixes used on the production network, while the production network learns about the lab networks from OSPFv2/3 redistributed into BGP, which is then redistributed back into OSPFv2/3. No, seriously, it's not too messy! This is part of the reason why I have a JUNOS box doing all the route redistribution: routing policies are a cinch to configure. Of course, there's only one way into the lab, so I don't see how a loop can ever form... | ||

Here's a breakdown of all the routers: | Here's a breakdown of all the routers: | ||

* relativity: Juniper Olive (physical box), provides lab connectivity to the outside world | |||

* defiant: Cisco 7200 PE router, pins up an L3 VPN between sisko and janeway | |||

* voyager: Cisco 7200 PE router (same) | |||

* cardassia: Cisco 7200 P router, also BGP RR | |||

* vorta: Juniper Olive (Qemu) | |||

* sisko: Cisco 3745 CE router for VRF PROLIXIUM | |||

* janeway: Cisco 3745 CE router for other end of VRF PROLIXIUM | |||

* excelsior: Cisco 7200 PE router for EoMPLS between sulu and picard | |||

* enterprise: Cisco 7200 PE router for EoMPLS / upstream connectivity | |||

* sulu: Cisco 3745 CE router providing connectivity to serendipity | |||

* picard: Cisco 3745 CE router providing connectivity to iridium | |||

Breakdown of all the VMware hosts: | Breakdown of all the VMware hosts: | ||

* arcadia: Red Hat Enterprise Linux 5, L3 VPN participant | |||

* sinc: Debian GNU/Linux, L3 VPN participant | |||

* iridium: Red Hat Enterprise Linux 5, EoMPLS testing | |||

* serendipity: Debian GNU/Linux, EoMPLS testing | |||

* cation: FreeBSD 6.2-STABLE, Quagga testing (removed) | |||

Lab goals: | Lab goals: | ||

* Create an MPLS 2547bis L3 VPN w/Internet access | |||

* Debug FreeBSD kernel panics with Quagga (read here) | |||

* Create an EoMPLS instance | |||

* Deploy DHCP-PD | |||

* Test MLD, MRT6, and PIM-SM for IPv6 | |||

The first goal was completed by connecting end hosts arcadia and sinc together, and providing a default route out to the Internet via a separate link on the CE router (sisko). I set up a VRF for the two small networks, and configured the PE and CE routers to talk BGP, using AS64514 for the client network (VRF PROLIXIUM). Internet access is provided via some trickery of BGP into OSPF and a static route (default-information originate via BGP on AS64514) pointing up to defiant's FastEthernet4/0 interface. Although stuff works, and is latent as heck, there are possibly some MTU issues with sinc that need to be worked out. | The first goal was completed by connecting end hosts arcadia and sinc together, and providing a default route out to the Internet via a separate link on the CE router (sisko). I set up a VRF for the two small networks, and configured the PE and CE routers to talk BGP, using AS64514 for the client network (VRF PROLIXIUM). Internet access is provided via some trickery of BGP into OSPF and a static route (default-information originate via BGP on AS64514) pointing up to defiant's FastEthernet4/0 interface. Although stuff works, and is latent as heck, there are possibly some MTU issues with sinc that need to be worked out. | ||


Here's a diagram: | Here's a diagram: | ||

MPLS | [[file:l3vpn.png|280px|PCN Lab with MPLS VPN]] | ||

The second goal was somewhat achieved. I placed cation on a small subnet hanging off of defiant. defiant doesn't announce this network into any routing protocols, and performs NAT/PAT for this network. I then pinned up two OpenVPN tunnels to the public IPs of starfire and nonce, with the goal of simulating an unstable network out on the Internet, hoping to see FreeBSD crash when multiple neighbor adjacencies flap. Didn't blink, but I'm still watching. | The second goal was somewhat achieved. I placed cation on a small subnet hanging off of defiant. defiant doesn't announce this network into any routing protocols, and performs NAT/PAT for this network. I then pinned up two OpenVPN tunnels to the public IPs of starfire and nonce, with the goal of simulating an unstable network out on the Internet, hoping to see FreeBSD crash when multiple neighbor adjacencies flap. Didn't blink, but I'm still watching. | ||


[[file:lab30.png|480px|Lab 3.0]] | [[file:lab30.png|480px|Lab 3.0]] | ||

=== Lab 3.1 === | |||

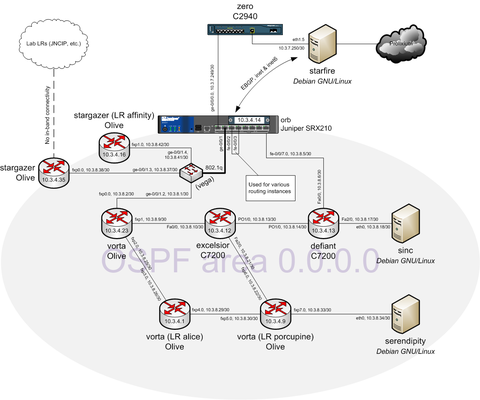

I picked up a Juniper SRX210 and used it to replace the Juniper J2320. I also added in stargazer and affinity via an 802.1q trunk: | |||

[[file:Lab31.png|480px|Lab 3.1]] | |||

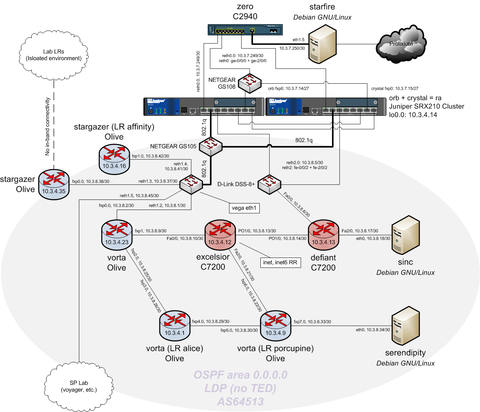

=== Lab 3.2 === | |||

Main lab isn't much different (added two SRXes, though): | |||

[[file:Lab32.png|480px|Lab 3.2]] | |||

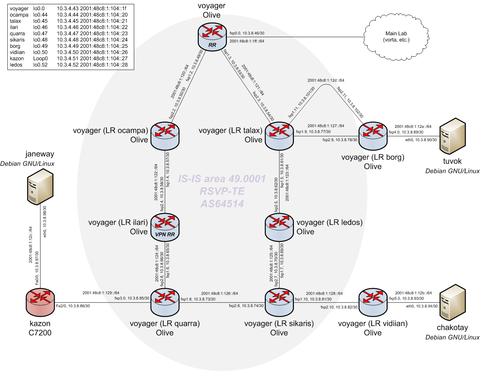

However, I added another autonomous system to simulate a service provider environment w/RSVP-TE, IS-IS, and L3VPNs: | |||

[[file:splab.png|480px|Service Provider Lab]] | |||

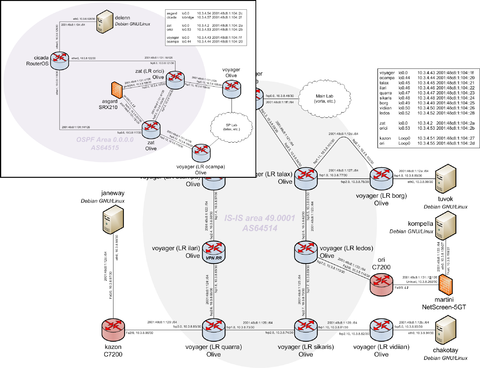

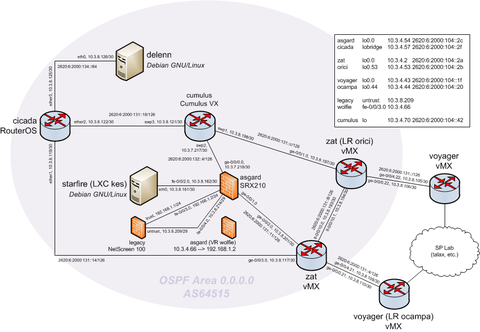

=== Lab 3.3 === | |||

I also added another AS hanging off the SP lab. This was to get some experience with inter-AS MPLS VPNs. asgard's ge-0/0/1 is connected to ori's Fa0/0 interface, which virtually connects martini's Untrust interface with zat's fxp4. Got it? Great! | |||

cicada was also added, which is an x86 VM running MikroTik's RouterOS. | |||

[[file:spvpnlab.png|480px|Lab 3.3]] | |||

=== Lab 3.4 === | |||

This has been updated to reflect the new Juniper EX2200-C switch and a small EIGRP network. | |||

[[file:lab34.png|480px|Lab 3.4]]</div></div> | |||

=== Lab 4.0 (current) === | |||

[[Image:rack-upper.jpg|thumb|PCN Lab Physical Network Devices]]I finally swapped out the junky NETGEAR switches for a Foundry FLS624. I also converted the Olives to vMXes. | |||

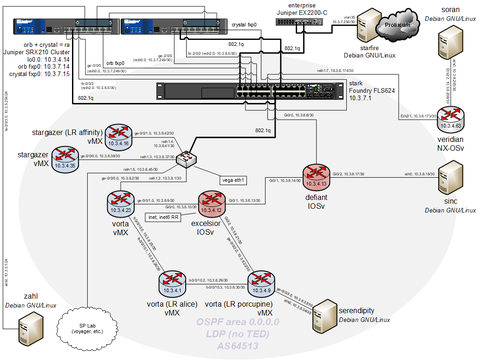

Main lab: | |||

[[file:lab40.png|480px|Lab 4.0]] | |||

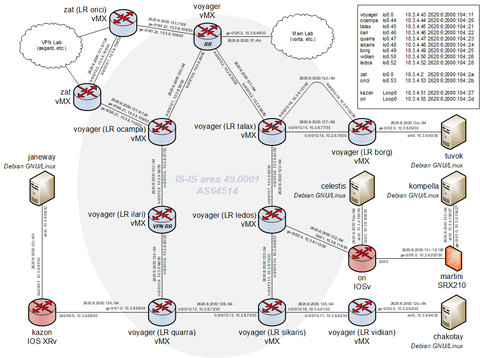

Service provider lab: | |||

[[file:lab40-splab.png|480px|Service Provider Lab 4.0]] | |||

Service provider VPN lab: | |||

[[file:lab40-vpnlab.png|480px|Service provider VPN lab 4.0]] | |||

== LR-only Environments == | |||

<div class="mw-collapsible mw-collapsed">This content is historical. Click '''expand''' to see it.<div class="mw-collapsible-content">To study for the [[JNCIP-M]] (and [[JNCIE-M]], now!) exam and put together a few simulations for work, I use the [[stargazer]] Qemu Olive instance with a bunch of isolated LRs. A couple examples are listed below. | |||

=== JNCIP-M Lab === | |||

There seem to be three lab variants in the JNCIP-M study material. All of them are configured on stargazer, a Juniper Olive running in [[QEMU]]: | |||

==== OSPF ==== | |||

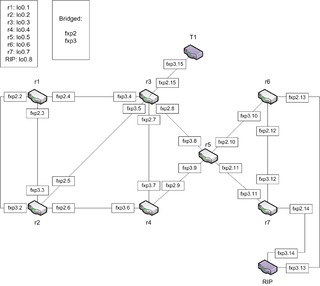

This lab setup uses two interfaces that connect from r6 to [[OSPF]] and r7 to OSPF: | |||

[[file:JNCIP_OSPF.png|320px|JNCIP-M lab with OSPF router]] | |||

==== RIP ==== | |||

This lab setup uses two interfaces that connect r6, r7, and RIP via a switch: | |||

[[file:JNCIP_RIP.png|320px|JNCIP-M lab with RIP router]] | |||

The switch is actually a Linux bridge with the tap interfaces as members. | |||

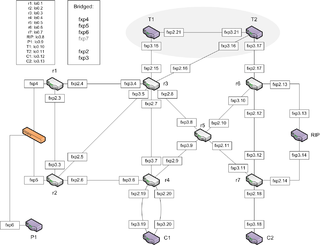

==== EBGP ==== | |||

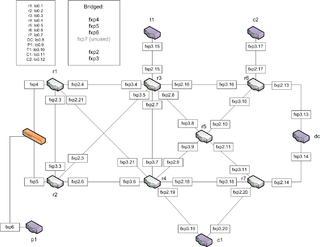

Chapter 6 focuses on EBGP, and introduces a couple new routers. The switch is moved to the VRRP segment between r1 and r2 (connects to P1) and T1, T2, C1, and C2 are introduced: | |||

[[file:JNCIP_EBGP.png|320px|JNCIP-M EBGP lab]] | |||

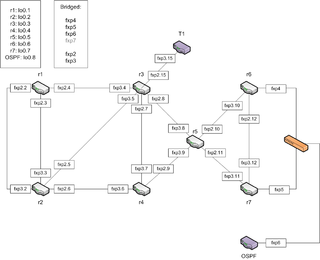

=== JNCIE-M Lab === | |||

There will be a couple variants of this lab environment. Right now there is only one. | |||

==== Generic ==== | |||

[[file:JNCIElab_generic.png|320px|JNCIE-M lab]]</div></div> | |||

Latest revision as of 05:17, 23 December 2019

- Warning: This entire article is written in the first-person (Mark Kamichoff's) point of view

The PCN lab is a network extension of the Prolixium Communications Network composed of Juniper vMX, Cumulus VX, MikroTik RouterOS, Cisco NX-OSv, Cisco IOS-XRv, and Cisco IOSv systems, with a few pieces of real equipment (SRX and ScreenOS firewalls). It has been traditionally used by Mark Kamichoff to test and break network things but now it mostly sits idle.

Random Lab Setups

Lab 1.0

My previous lab setup involved a basic Dynamips setup, with the goal of learning and toying with MPLS, multicast, and other weird stuff. The lab consisted of:

- 4x emulated Cisco 7206VXR routers

- 1x Juniper M40 (Olive) router

- 1x Debian GNU/Linux virtual machine

All routers ran MPLS with LDP, which allows for dynamic creation of LSPs and much less configuration than RSVP-TE. Latency to the end host, sinc, was pretty horrible: roughly 150ms from the LAN. Bandwidth was limited to around 50KB/sec, since all Cisco routers, along with the VM, were emulated on a single Dell Dimension 2350 w/a Celeron processor. The Juniper box was the only real piece of hardware. Still, it's pretty fun.

Lab 2.0

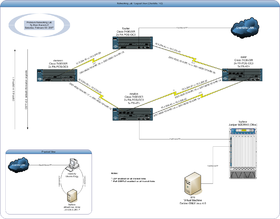

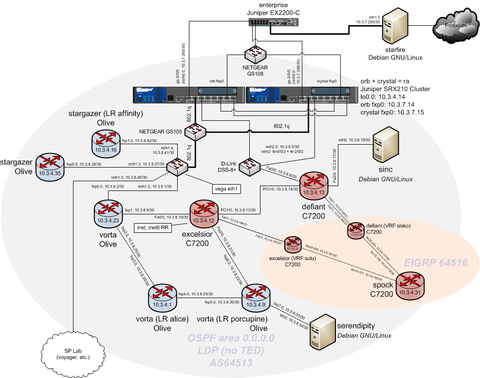

After I upgraded my main PC to a Core 2 Extreme processor, I realized I had a spare Athlon64 3200+ system that could run tons of Dynamips simulators without breaking a sweat. Unfortunately the 0.2.7 release of Dynamips consumes a ton more CPU than previous versions, so I'm only able to run five simulators without the system becoming too sluggish to use. I picked up a new box, vega, which handles the load just fine. I built configurations for 9 routers and 5 VMware hosts, plus a Juniper Olive (real machine). Here's a diagram of the setup:

Yep, just a bit of a Star Trek theme.

The lab runs multiprotocol BGP (IPv6 and IPv4), OSPFv2, OSPFv3, and LDP (MPLS enabled on all transit links). Connectivity to the outside production network is provided via relativity, a Juniper Olive, which is connected to a dedicated Fast Ethernet on starfire, my core router. relativity and starfire have an EBGP peering session, with IPv4 and IPv6 address families, and do a form of conditional mutual route redistribution (it's not that bad, really..). The lab learns a default route via BGP (0/0 and ::/0), as well as specific prefixes used on the production network, while the production network learns about the lab networks from OSPFv2/3 redistributed into BGP, which is then redistributed back into OSPFv2/3. No, seriously, it's not too messy! This is part of the reason why I have a JUNOS box doing all the route redistribution: routing policies are a cinch to configure. Of course, there's only one way into the lab, so I don't see how a loop can ever form...
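To make the "default route plus specific prefixes" idea concrete, here is a minimal Python sketch of longest-prefix matching. The prefixes are documentation ranges picked purely for illustration (they are not the real lab or production prefixes), and the `lookup` helper is hypothetical, not something any router actually runs.

```python
import ipaddress

# Hypothetical table: the two BGP-learned defaults (0/0 and ::/0) plus
# made-up "production" specifics taken from documentation address space.
ROUTES = {
    ipaddress.ip_network("0.0.0.0/0"): "default via BGP",
    ipaddress.ip_network("::/0"): "default via BGP",
    ipaddress.ip_network("192.0.2.0/24"): "production prefix via BGP",
    ipaddress.ip_network("2001:db8:10::/48"): "production prefix via BGP",
}


def lookup(destination):
    """Return the longest matching prefix and its description."""
    addr = ipaddress.ip_address(destination)
    # Only compare against routes of the same address family.
    matches = [
        net for net in ROUTES
        if net.version == addr.version and addr in net
    ]
    # The most specific route (largest prefix length) wins.
    best = max(matches, key=lambda net: net.prefixlen)
    return f"{best} ({ROUTES[best]})"


if __name__ == "__main__":
    for dest in ("192.0.2.55", "198.51.100.1", "2001:db8:10::1", "2001:db8:ffff::1"):
        print(dest, "->", lookup(dest))
```

Destinations inside a specific prefix follow that prefix; everything else falls through to the default of the matching address family.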

Here's a breakdown of all the routers:

- relativity: Juniper Olive (physical box), provides lab connectivity to the outside world

- defiant: Cisco 7200 PE router, pins up an L3 VPN between sisko and janeway

- voyager: Cisco 7200 PE router (same)

- cardassia: Cisco 7200 P router, also BGP RR

- vorta: Juniper Olive (Qemu)

- sisko: Cisco 3745 CE router for VRF PROLIXIUM

- janeway: Cisco 3745 CE router for other end of VRF PROLIXIUM

- excelsior: Cisco 7200 PE router for EoMPLS between sulu and picard

- enterprise: Cisco 7200 PE router for EoMPLS / upstream connectivity

- sulu: Cisco 3745 CE router providing connectivity to serendipity

- picard: Cisco 3745 CE router providing connectivity to iridium

Breakdown of all the VMware hosts:

- arcadia: Red Hat Enterprise Linux 5, L3 VPN participant

- sinc: Debian GNU/Linux, L3 VPN participant

- iridium: Red Hat Enterprise Linux 5, EoMPLS testing

- serendipity: Debian GNU/Linux, EoMPLS testing

- cation: FreeBSD 6.2-STABLE, Quagga testing (removed)

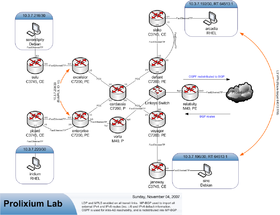

Lab goals:

- Create an MPLS 2547bis L3 VPN w/Internet access

- Debug FreeBSD kernel panics with Quagga (read here)

- Create an EoMPLS instance

- Deploy DHCP-PD

- Test MLD, MRT6, and PIM-SM for IPv6

The first goal was completed by connecting end hosts arcadia and sinc together, and providing a default route out to the Internet via a separate link on the CE router (sisko). I set up a VRF for the two small networks, and configured the PE and CE routers to talk BGP, using AS64514 for the client network (VRF PROLIXIUM). Internet access is provided via some trickery of BGP into OSPF and a static route (default-information originate via BGP on AS64514) pointing up to defiant's FastEthernet4/0 interface. Although stuff works, and is latent as heck, there are possibly some MTU issues with sinc that need to be worked out.
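One plausible source of the MTU trouble is label overhead. The sketch below is only back-of-the-envelope arithmetic, assuming a 1500-byte link MTU that was not raised to make room for MPLS and a two-label stack (transport plus VPN label) in the core; I haven't confirmed this is what actually bites sinc. The function name is hypothetical.

```python
MPLS_LABEL_BYTES = 4  # each MPLS label stack entry is 4 bytes


def max_ip_packet(link_mtu, labels):
    """Largest IP packet that still fits after pushing `labels` MPLS labels."""
    return link_mtu - labels * MPLS_LABEL_BYTES


if __name__ == "__main__":
    # Assumption: L3 VPN traffic carries two labels across the core.
    print(max_ip_packet(1500, 2))  # 1492
```

If the end hosts send full 1500-byte packets with DF set, anything over that figure would need either a larger MPLS MTU on the transit links or working path MTU discovery.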

Here's a diagram:

The second goal was somewhat achieved. I placed cation on a small subnet hanging off of defiant. defiant doesn't announce this network into any routing protocols, and performs NAT/PAT for this network. I then pinned up two OpenVPN tunnels to the public IPs of starfire and nonce, with the goal of simulating an unstable network out on the Internet, hoping to see FreeBSD crash when multiple neighbor adjacencies flap. Didn't blink, but I'm still watching.

EoMPLS between enterprise and excelsior (connecting picard and sulu, respectively) is complete. Since sulu and picard are to be treated as real customers (perhaps some business class service), IPv4 static routes for their prefixes are pointed down to picard. picard then runs OSPFv2 with sulu, and redistributes a default route.

DHCP-PD was set up between picard (RR) and enterprise (DR) for a little while. It seemed to work well, and didn't require much administrative overhead on either the provider or customer's side. I hope that some ISPs will start using this in the future.
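The low overhead comes from the requesting router being handed one block and carving its own LAN prefixes out of it. A rough Python sketch of that carving step follows; the delegated /48 is a documentation prefix chosen for illustration (not what enterprise actually handed out), and `carve_lan_prefixes` is a hypothetical helper.

```python
import ipaddress
from itertools import islice


def carve_lan_prefixes(delegated, count):
    """Return the first `count` /64 subnets inside a delegated prefix."""
    block = ipaddress.ip_network(delegated)
    # subnets() is lazy, so only the requested /64s are generated.
    return [str(net) for net in islice(block.subnets(new_prefix=64), count)]


if __name__ == "__main__":
    # Assumption: the delegating router hands out a /48.
    for prefix in carve_lan_prefixes("2001:db8:1234::/48", 3):
        print(prefix)
```

The provider side only has to track the one delegated block; which /64 lands on which customer interface is entirely the requesting router's business.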

The multicast testing (PIM-SM, et al.) is starting outside the lab, on starfire (my main router) and relativity (the Juniper Olive).

Lab 3.0

I picked up a real Juniper J2320 router on eBay to help with studying for the Juniper enterprise routing exams. I put it in the laundry room along with an HP box (vega) to run KVM and dynamips. Here's a diagram (more to follow, later):

Lab 3.1

I picked up a Juniper SRX210 and used it to replace the Juniper J2320. I also added in stargazer and affinity via an 802.1q trunk:

Lab 3.2

Main lab isn't much different (added two SRXes, though):

However, I added another autonomous system to simulate a service provider environment w/RSVP-TE, IS-IS, and L3VPNs:

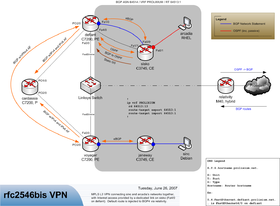

Lab 3.3

I also added another AS hanging off the SP lab. This was to get some experience with inter-AS MPLS VPNs. asgard's ge-0/0/1 is connected to ori's Fa0/0 interface, which virtually connects martini's Untrust interface with zat's fxp4. Got it? Great!

cicada was also added, which is an x86 VM running MikroTik's RouterOS.

Lab 3.4

This has been updated to reflect the new Juniper EX2200-C switch and a small EIGRP network.

Lab 4.0 (current)

I finally swapped out the junky NETGEAR switches for a Foundry FLS624. I also converted the Olives to vMXes.

Main lab:

Service provider lab:

Service provider VPN lab:

LR-only Environments

JNCIP-M Lab

There seem to be three lab variants in the JNCIP-M study material. All of them are configured on stargazer, a Juniper Olive running in QEMU:

OSPF

This lab setup uses two interfaces that connect from r6 to OSPF and r7 to OSPF:

RIP

This lab setup uses two interfaces that connect r6, r7, and RIP via a switch:

The switch is actually a Linux bridge with the tap interfaces as members.
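For anyone rebuilding this, the bridge can be sketched as a handful of iproute2 commands. The Python below only builds the command list and does not run anything (running them needs root); the bridge and tap names are placeholders, not the ones actually used on the host, and `bridge_commands` is a hypothetical helper.

```python
def bridge_commands(bridge, taps):
    """Build iproute2 commands for a bridge with tap interfaces as members."""
    commands = [["ip", "link", "add", "name", bridge, "type", "bridge"]]
    for tap in taps:
        # Create the tap device, enslave it to the bridge, and bring it up.
        commands.append(["ip", "tuntap", "add", "dev", tap, "mode", "tap"])
        commands.append(["ip", "link", "set", "dev", tap, "master", bridge])
        commands.append(["ip", "link", "set", "dev", tap, "up"])
    commands.append(["ip", "link", "set", "dev", bridge, "up"])
    return commands


if __name__ == "__main__":
    # Placeholder names; pass each list to subprocess.run() as root to apply.
    for cmd in bridge_commands("br-rip", ["tap0", "tap1"]):
        print(" ".join(cmd))
```

Each emulated router interface then attaches to one of the taps, and the bridge forwards frames between them like a dumb switch.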

EBGP

Chapter 6 focuses on EBGP, and introduces a couple new routers. The switch is moved to the VRRP segment between r1 and r2 (connects to P1) and T1, T2, C1, and C2 are introduced:

JNCIE-M Lab

There will be a couple variants of this lab environment. Right now there is only one.

Generic