Prolixium Communications Network

| Line 59: | Line 59: | ||

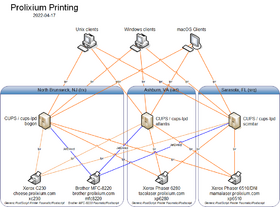

The whole printing/CUPS/lpd setup is mostly an annoyance. Most people would want to run CUPS on every Unix client on the network. Mark Kamichoff believes it's better to have a lightweight client send a PostScript file via lpd to a CUPS server rather than sending a huge RAW raster stream across the network and have both the client and server do print processing. Bonjour connection is just horrid. See the diagram to the bottom: | The whole printing/CUPS/lpd setup is mostly an annoyance. Most people would want to run CUPS on every Unix client on the network. Mark Kamichoff believes it's better to have a lightweight client send a PostScript file via lpd to a CUPS server rather than sending a huge RAW raster stream across the network and have both the client and server do print processing. Bonjour connection is just horrid. See the diagram to the bottom: | ||

[[file: | [[file:printing.png|280px|PCN Printing Setup]] | ||

== History == | == History == | ||

Revision as of 03:31, 27 May 2009

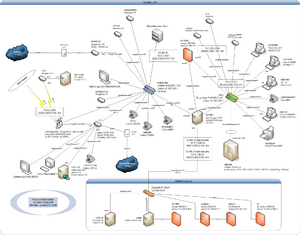

The Prolixium Communications Network (known also as PCN, mynet, My Network, and Prolixium .NET) is a collection of small, geographically dispersed computer networks that provide IPv4, IPv6, VPN, and VoIP transport to the Kamichoff family. Owned and operated solely by Mark Kamichoff, PCN often serves as a testbed for various network experiments. Most PCN nodes are connected via residential data services (cable modem), while some located in data centers have Fast Ethernet connections to the Internet.

Current State

Overview

As of May 8, 2009, PCN is composed of several networks along the east coast of the United States, connected via OpenVPN and 6in4 tunnels:

- North Brunswick, NJ (home): nat.prolixium.com on HFC via Optimum Online

- New York, NY (vox): dax.prolixium.com on Fast Ethernet via Voxel dot Net

- Tampa, FL (sago): nonce.prolixium.com on Fast Ethernet via Sago Networks

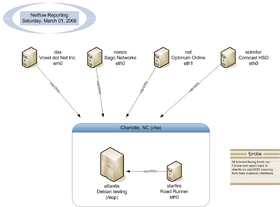

- Charlotte, NC (cha): starfire.prolixium.com on HFC via Road Runner

- Sarasota, FL (srst): scimitar.prolixium.com on HFC via Comcast

- Carteret, NJ (car): tachyon.prolixium.com on HFC via Comcast

Each site has OpenVPN tunnels to multiple other locations (the network is almost fully meshed), each carrying a 6in4 tunnel inside, providing both IPv4 and IPv6 communications with data protection and security. Quagga's ospfd, ospf6d, and bgpd are used in the production network (the term production is relative) on commodity PC hardware, while the Charlotte site also uses Juniper NetScreen and SSG firewalls.
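For a sense of scale, a full mesh among the six sites in the overview needs n(n-1)/2 tunnels. The short sketch below just enumerates the site pairs, using the site codes from the list above; since the real network is only almost fully meshed, the actual tunnel count may be lower.

```python
from itertools import combinations

# Site codes from the overview list; "home" is North Brunswick, NJ.
SITES = ["home", "vox", "sago", "cha", "srst", "car"]


def full_mesh_links(sites):
    """Return every unordered pair of sites, i.e. one tunnel per pair in a full mesh."""
    return list(combinations(sites, 2))


links = full_mesh_links(SITES)
print(len(links))  # 6 * 5 / 2 = 15
```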

Routing

The routing infrastructure consists of several autonomous systems numbered from the IANA-reserved private range, 64512 through 65534. Each site runs IBGP, possibly with a route reflector, and its own IGP for local next-hop resolution. EBGP is used between sites and on peering connections. IPv4 Internet connectivity for each site is achieved by advertising default routes from the machines performing NAT. The lab is connected to starfire in Charlotte (cha). PCN originally ran as one large OSPF area with no EGP; it was converted to a BGP confederation, then reconverted to its current design.
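The IBGP/EBGP split follows directly from the AS assignment: a session is IBGP when both ends share an AS number and EBGP otherwise. The sketch below illustrates that rule together with a range check for 16-bit private ASNs. The site-to-ASN mapping is hypothetical; the numbers actually used on PCN are not given here.

```python
# Hypothetical site-to-ASN assignments, for illustration only.
SITE_ASN = {"home": 65001, "vox": 65002, "cha": 65003}

# 16-bit private-use ASN range cited above.
PRIVATE_ASN_MIN, PRIVATE_ASN_MAX = 64512, 65534


def is_private_asn(asn: int) -> bool:
    """True if the ASN falls within the 16-bit private-use range."""
    return PRIVATE_ASN_MIN <= asn <= PRIVATE_ASN_MAX


def session_type(local_site: str, peer_asn: int) -> str:
    """IBGP when the peer shares the local site's AS, EBGP otherwise."""
    return "IBGP" if SITE_ASN[local_site] == peer_asn else "EBGP"


print(session_type("cha", 65003))  # IBGP: another router inside Charlotte
print(session_type("cha", 65002))  # EBGP: the New York site
```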

IPv6 Connectivity

IPv6 connectivity is provided by dax.prolixium.com through a tunnel to OCCAID in Newark, NJ (<1ms away from dax!). The rest of the network learns the IPv6 default route (::/0) via BGP from dax.prolixium.com, which runs OpenBSD's pf to regulate inbound and outbound IPv6 traffic through stateful inspection.
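As a rough illustration of what stateful inspection means here, the toy model below passes any outbound flow, records its 5-tuple, and admits an inbound packet only when it is the reply to a recorded flow. It is a conceptual sketch, not how pf is implemented, and it ignores TCP flags, timeouts, and ICMPv6; the addresses come from the 2001:db8::/32 documentation prefix.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    proto: str  # e.g. "tcp" or "udp"
    src: str
    sport: int
    dst: str
    dport: int


class StatefulFilter:
    """Toy model of stateful inspection (not pf's actual implementation)."""

    def __init__(self):
        # Each entry is the 5-tuple of an outbound flow that was allowed.
        self.states = set()

    def outbound(self, pkt: Packet) -> bool:
        # Pass any outbound packet and remember its flow.
        self.states.add((pkt.proto, pkt.src, pkt.sport, pkt.dst, pkt.dport))
        return True

    def inbound(self, pkt: Packet) -> bool:
        # Pass an inbound packet only if it reverses a known outbound flow.
        reverse = (pkt.proto, pkt.dst, pkt.dport, pkt.src, pkt.sport)
        return reverse in self.states


fw = StatefulFilter()
fw.outbound(Packet("tcp", "2001:db8:1::10", 40000, "2001:db8:ffff::80", 443))
print(fw.inbound(Packet("tcp", "2001:db8:ffff::80", 443, "2001:db8:1::10", 40000)))  # True
print(fw.inbound(Packet("tcp", "2001:db8:ffff::80", 443, "2001:db8:1::10", 40001)))  # False
```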

DNS

DNS is somewhat tricky, but handled nicely by BIND9's views feature. PCN has two external nameservers and four internal ones, all of which perform zone transfers from the master nameserver, ns3.antiderivative.net. antiderivative.net is used for all NS records, as well as the glue records at the gTLD servers. The internal nameservers are ns1 through ns4, and the external ones are ns2 and ns3. Each zone has two views, internal and external, plus a common file that is included in both views (SOA, etc.). The zones include the following:

- Internal view, answering to 10/8, 172.16/12, and 192.168/16 addresses
  - 3.10.in-addr.arpa. and 3.16.172.in-addr.arpa. reverse zones
  - prolixium.com, prolixium.net, kamichoff.com, and antiderivative.net's internal A/CNAME records

- External view, answering to all non-RFC 1918 addresses
  - prolixium.com, prolixium.net, kamichoff.com, and antiderivative.net's external A/CNAME records

- Common information, answering for all hosts
  - 180/30.189.9.69.in-addr.arpa., 232/29.186.9.69.in-addr.arpa., and 6.d.a.8.0.7.4.0.1.0.0.2.ip6.arpa. reverse zones
  - MX records common to prolixium.com, prolixium.net, kamichoff.com, antiderivative.net, and others
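Both the view selection and the reverse zone names in the list can be reproduced with Python's standard ipaddress module. The sketch below mimics a match-clients rule that sends RFC 1918 sources to the internal view, and derives the RFC 2317-style IPv4 and nibble-format IPv6 zone names from their prefixes. The prefixes 69.9.189.180/30, 69.9.186.232/29, and 2001:470:8ad6::/48 are inferred from the zone names above; the functions are illustrative helpers, not part of BIND.

```python
import ipaddress

RFC1918 = [ipaddress.ip_network(p) for p in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]


def select_view(client_ip: str) -> str:
    """Mimic a match-clients rule: RFC 1918 sources see 'internal', all others 'external'."""
    addr = ipaddress.ip_address(client_ip)
    if addr.version == 4 and any(addr in net for net in RFC1918):
        return "internal"
    return "external"


def ipv4_classless_zone(prefix: str) -> str:
    """RFC 2317-style name '<last octet>/<len>.<c>.<b>.<a>.in-addr.arpa.' for a block longer than /24."""
    net = ipaddress.IPv4Network(prefix)
    if net.prefixlen <= 24:
        raise ValueError("classless delegation applies to prefixes longer than /24")
    a, b, c, d = str(net.network_address).split(".")
    return f"{d}/{net.prefixlen}.{c}.{b}.{a}.in-addr.arpa."


def ipv6_nibble_zone(prefix: str) -> str:
    """ip6.arpa zone name for an IPv6 prefix on a 4-bit (nibble) boundary."""
    net = ipaddress.IPv6Network(prefix)
    if net.prefixlen % 4:
        raise ValueError("prefix length must be a multiple of 4")
    nibbles = net.network_address.exploded.replace(":", "")[: net.prefixlen // 4]
    return ".".join(reversed(nibbles)) + ".ip6.arpa."


print(select_view("10.3.0.5"))      # internal
print(select_view("198.51.100.7"))  # external
print(ipv4_classless_zone("69.9.189.180/30"))
print(ipv6_nibble_zone("2001:470:8ad6::/48"))
```

Here `ipv4_classless_zone("69.9.189.180/30")` yields `180/30.189.9.69.in-addr.arpa.` and `ipv6_nibble_zone("2001:470:8ad6::/48")` yields `6.d.a.8.0.7.4.0.1.0.0.2.ip6.arpa.`, matching the zones listed above.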

Previously, the Xicada DNS Service (developed by Mark Kamichoff) kept track of all forward delegations, as well as IPv4 reverse delegations, on Xicada. The administrator of each node entered their zones into a web form and then configured their DNS server to pull down a forwarders definition for all Xicada zones. The service supported BIND and djbdns, and also produced a CSV file for anyone using another DNS server. The original intent was for each DNS server to pull down a fresh copy of the forwarders definition file nightly, but there were really no rules.

Mark Kamichoff has a policy on his network of keeping DNS entries (A, AAAA, and PTR) for each and every active IP address. If a host goes offline, its DNS records should be expunged immediately. This removes the need for a host management system or a collection of poorly maintained spreadsheets. If an IP address is needed, its PTR record should be checked first to see whether the address is in use. All DHCP-assigned IP addresses have records of the form {site ID}-{lastoctet}.prolixium.com. Again, no confusion. DNS itself is a database, so why not use it?
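To illustrate the DHCP naming policy, the sketch below generates matching A and PTR zone-file lines for a range of addresses, naming each host with a site identifier followed by the address's last octet. The site ID "cha" and the 10.3.1.100-101 range are hypothetical examples; the real DHCP pools are not listed here.

```python
import ipaddress


def dhcp_records(site_id: str, first: str, last: str, domain: str = "prolixium.com"):
    """Return (A line, PTR line) pairs for every address from first to last, inclusive."""
    start, end = int(ipaddress.IPv4Address(first)), int(ipaddress.IPv4Address(last))
    records = []
    for n in range(start, end + 1):
        addr = ipaddress.IPv4Address(n)
        # Host label is <site ID>-<last octet>, e.g. cha-100.
        fqdn = f"{site_id}-{str(addr).split('.')[-1]}.{domain}."
        records.append((f"{fqdn} IN A {addr}", f"{addr.reverse_pointer}. IN PTR {fqdn}"))
    return records


for a_line, ptr_line in dhcp_records("cha", "10.3.1.100", "10.3.1.101"):
    print(a_line)
    print(ptr_line)
```

Generating both records from one loop keeps the forward and reverse zones in step, which is what makes the "DNS as the database" approach workable.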

All transit links on PCN are addressed using the prolixium.net domain. The format is {unit/VLAN}.{interface}.{host}.prolixium.net; for example, the xl1 interface on starfire would be xl1.starfire.prolixium.net. There is a set of DNS entries for every IPv4 and IPv6 transit link, and not one hop in the network lacks a PTR record (or has a PTR record without a corresponding A or AAAA record). Each router has a loopback interface with IPv4 and IPv6 addresses (if supported).
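The transit naming scheme and the rule that every hop has a matching PTR lend themselves to a simple audit. The sketch below builds names in the {unit/VLAN}.{interface}.{host}.prolixium.net format and reports addresses with no PTR record, as well as PTR records whose name does not map back to the address. It assumes the forward and reverse tables were collected elsewhere (for example, from zone files), ignores DNS case-insensitivity and trailing dots, and uses hypothetical sample data.

```python
from typing import Dict, List, Optional, Set


def transit_name(host: str, interface: str, unit: Optional[str] = None,
                 domain: str = "prolixium.net") -> str:
    """Build {unit/VLAN}.{interface}.{host}.{domain}, omitting the unit when there is none."""
    labels = ([unit] if unit else []) + [interface, host, domain]
    return ".".join(labels)


def missing_ptr(forward: Dict[str, Set[str]], reverse: Dict[str, str]) -> List[str]:
    """Addresses that have an A/AAAA record but no PTR record."""
    return sorted(a for addrs in forward.values() for a in addrs if a not in reverse)


def orphan_ptr(forward: Dict[str, Set[str]], reverse: Dict[str, str]) -> List[str]:
    """Addresses whose PTR name has no A/AAAA record pointing back at them."""
    return sorted(a for a, name in reverse.items() if a not in forward.get(name, set()))


# Hypothetical sample data: name -> addresses, and address -> PTR name.
forward = {
    transit_name("starfire", "xl1"): {"10.3.255.1"},
    transit_name("dax", "em0", "100"): {"10.3.255.2"},
}
reverse = {
    "10.3.255.1": "xl1.starfire.prolixium.net",
    "10.3.255.9": "old.nonce.prolixium.net",
}
print(missing_ptr(forward, reverse))  # ['10.3.255.2']
print(orphan_ptr(forward, reverse))   # ['10.3.255.9']
```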

Charlotte-Specific Setup

The network setup in Charlotte differs slightly from the other sites, where a single router carries all Internet and WAN traffic. Road Runner provides three public IP addresses, which have been assigned to:

- A Juniper Networks NetScreen-5GT (einstein)

- A Juniper Networks SSG 5 Wireless (e)

- A Linux router (starfire)

starfire is the core router, with 3x Fast Ethernet and 2x Gigabit Ethernet interfaces. VPN traffic leaves through starfire, but all non-RFC 1918 traffic is balanced between the two firewalls by L4 protocol. A NetFlow collector also runs on atlantis, as depicted in the drawing below:

Previously, the NetFlow collector ran ntop, but this was uninstalled due to instability.

The whole printing/CUPS/lpd setup is mostly an annoyance. Most people would run CUPS on every Unix client on the network. Mark Kamichoff believes it's better to have a lightweight client send a PostScript file via lpd to a CUPS server, rather than send a huge RAW raster stream across the network and have both the client and server do print processing. Bonjour connectivity is just horrid. See the diagram below:
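A minimal sketch of the lightweight-client approach, assuming the CUPS server accepts LPD jobs (for example through CUPS's cups-lpd mini-server): the client submits a ready-made PostScript file with the RFC 1179 "receive a printer job" command, and an `o` line in the control file marks the data as PostScript. The server, queue, host, and user names are placeholders, and details such as binding to a reserved source port, which some LPD servers require, are omitted.

```python
import socket

LPD_PORT = 515


def control_file(host: str, user: str, job: int, title: str) -> bytes:
    """RFC 1179 control file that prints dfA<job><host> as PostScript ('o' line)."""
    data_name = f"dfA{job:03d}{host}"
    lines = [
        f"H{host}",       # originating host
        f"P{user}",       # user identification
        f"J{title}",      # job name for the banner page
        f"o{data_name}",  # print the data file as PostScript
        f"U{data_name}",  # unlink the data file when done
        f"N{title}",      # name of the source file
    ]
    return ("\n".join(lines) + "\n").encode("ascii")


def _expect_ack(sock: socket.socket) -> None:
    # The server acknowledges each step with a single zero octet.
    if sock.recv(1) != b"\x00":
        raise OSError("LPD server rejected the request")


def lpr_postscript(server: str, queue: str, postscript: bytes, host: str, user: str,
                   job: int = 1, title: str = "job.ps", port: int = LPD_PORT) -> None:
    """Submit a PostScript document to an LPD queue without any local rasterizing."""
    cf = control_file(host, user, job, title)
    with socket.create_connection((server, port)) as sock:
        sock.sendall(b"\x02" + queue.encode("ascii") + b"\n")  # receive a printer job
        _expect_ack(sock)
        sock.sendall(f"\x02{len(cf)} cfA{job:03d}{host}\n".encode("ascii"))  # control file header
        _expect_ack(sock)
        sock.sendall(cf + b"\x00")
        _expect_ack(sock)
        sock.sendall(f"\x03{len(postscript)} dfA{job:03d}{host}\n".encode("ascii"))  # data file header
        _expect_ack(sock)
        sock.sendall(postscript + b"\x00")
        _expect_ack(sock)
```

A call such as `lpr_postscript("cups-server", "laser", open("report.ps", "rb").read(), host="nat", user="mark")` (all names hypothetical) sends the file as-is, leaving all print processing to the CUPS server.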

History

- Warning: This section is written in the first-person (Mark Kamichoff's) point of view

After joining the Xicada network back at RPI, I decided to continue linking all of my networks and sites together via various VPN technologies. At first, the network was just a simple VPN between my network at home and a few computers in my dorm room at RPI. The connection tunnelled through RPI's firewall like a knife through warm butter, using OpenVPN's UDP encapsulation mode. Actually, a site-to-site UDP tunnel was the only thing OpenVPN offered back then. My router at RPI was a blazing-fast Pentium 166MHz box running Debian GNU/Linux. At that point, my Xicada tunnels were terminated on another box I found in the trash, an old AMD K6-300, which eventually ran FreeBSD 4.

The network quickly started expanding, and I was able to move the K6-300 box (starfire) into the ACM's lab in the basement of the DCC, which had a 100 Mbit link. At that point, my network had three sites: home, the lab, and my dorm room. Since I didn't stay at RPI during most summers, I re-terminated the Xicada links on starfire, which sported a more permanent link.

Shortly after starfire was moved to the lab, I started toying with IPv6 and acquired a tunnel via Freenet6 (now Hexago, since they're actually trying to sell products, or something). RPI's firewall wouldn't pass IP protocol 41, and my attempts at getting it opened up for my IP failed. So, I terminated the IPv6 tunnel on my box at home, which sat on Optimum Online. Freenet6 gave me a /48 block out of the 3ffe::/16 6bone space, and I started distributing /64s to all of my LAN segments. I started running Zebra's OSPFv3 daemon and realized it was buggy as all get out. It mostly worked, though. Since Freenet6 gave me an ip6.int. delegation, I spent some time applying tons of patches to djbdns, my DNS server of choice back then. After all that patching, I had IPv6 support, which was fairly neat at the time. What did I use this newfound IPv6 connectivity for? IRC and web site hosting. www.prolixium.com has had an AAAA record since at least 2003.

Sometime in 2003 (I forget when), I moved my IPv6 tunnel to BTExact, British Telecom's free tunnel broker, which actually gave out non-6bone /48s and ip6.arpa. DNS delegations. I enjoyed quicker speeds than Freenet6 for about a year. Of course, after a year, my parents had a power outage at home, and my server lost the IP it had held with OOL for the past two years. BTExact had by then frozen their tunnel broker service and didn't allow any modifications or new tunnels. I went back to Freenet6, which had moved to 2001::/16 space.

After leaving RPI and getting a job, I decided to purchase a dedicated server from SagoNet, extending my network down to Tampa, FL, where the server was located.

Fast-forwarding to the present day, I currently have five sites, and an IPv6 tunnel to a North American broker, provided by Hurricane Electric. Almost every host on the network is IPv6-aware, and the IPv6 connectivity is controlled by pf, running on a dedicated server (dax) in New York, NY.

Xicada connectivity has since been terminated due to lack of interest.

Applications

App foo.

Lab

- Main Article: PCN Lab

Lab foo.